High Performance Computing


HPC combines powerful computers with advanced techniques to solve complex problems or process large amounts of data at very high speed. These systems use parallel processing, in which many processors work on parts of the same problem simultaneously, to achieve their computational power.
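The split/compute/combine pattern behind parallel processing can be sketched in a few lines of Python. This is a minimal illustration, not how production HPC codes are written. The function names are hypothetical, and because CPython threads share a global interpreter lock, this version shows the structure of the work split rather than a real speedup. Real HPC jobs apply the same pattern with separate processes, MPI ranks spread across nodes, or GPU threads.

```python
from concurrent.futures import ThreadPoolExecutor


def sum_of_squares(start, stop):
    """Sum i*i for i in [start, stop): one independent chunk of the job."""
    return sum(i * i for i in range(start, stop))


def parallel_sum_of_squares(n, workers=4):
    """Split [0, n) into `workers` chunks, evaluate them concurrently, and combine."""
    # Chunk boundaries; chunk sizes differ by at most one item.
    bounds = [(w * n // workers, (w + 1) * n // workers) for w in range(workers)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Each worker handles one chunk; no chunk depends on another.
        partials = pool.map(lambda b: sum_of_squares(*b), bounds)
        # Combine (reduce) the partial results into the final answer.
        return sum(partials)


if __name__ == "__main__":
    n = 1_000_000
    # The parallel decomposition must agree with the plain serial loop.
    assert parallel_sum_of_squares(n) == sum_of_squares(0, n)
    print(parallel_sum_of_squares(n))
```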

The main purpose of the HPC projects is to find the most effective and efficient way for faculty to process large amounts of data, and to develop methods that organize and summarize the results so that faculty can easily access and understand them.

Supercharge

In simpler terms, imagine HPC as a supercharged engine for computing tasks. Just as a powerful car can accelerate quickly and handle challenging terrain with ease, HPC systems can tackle complex calculations or data analysis tasks quickly and efficiently. This speed and efficiency enable researchers and professionals to accomplish tasks that would be impractical or impossible with conventional computing resources.

CPU vs. GPU: Core Differences

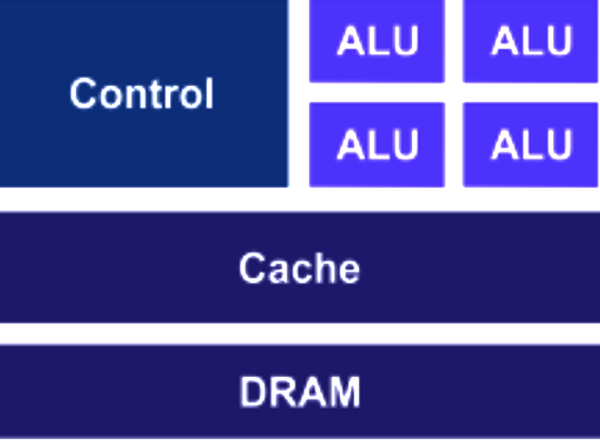

CPU

CPUs have a small number of powerful cores

Suited for serial workloads

Designed for general purpose calculations

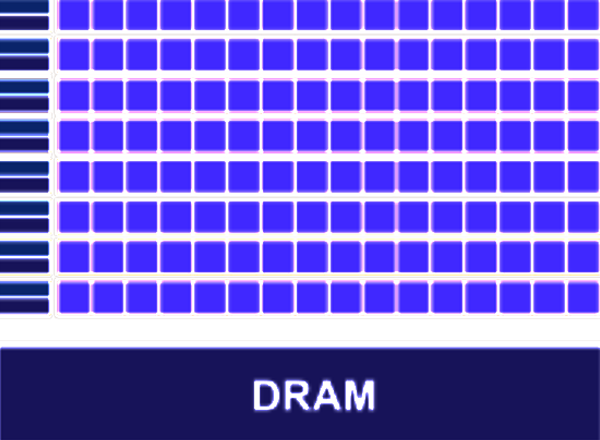

GPU

GPUs have thousands of simpler, less powerful cores

Suited for parallel workloads

Originally designed for graphics processing
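The difference between serial and parallel workloads comes down to dependencies between steps. In this hypothetical Python sketch, a running balance needs the previous result before it can compute the next one, which suits a few fast CPU cores. Scaling every element of a list has no such dependency, so the elements can be processed in any order or handed to thousands of GPU-style workers. The shuffled order below only simulates independent workers finishing at different times.

```python
import random


def running_balance(deposits, rate):
    """Serial workload: each step needs the balance from the previous step
    (a loop-carried dependency), so steps cannot run side by side."""
    balance = 0.0
    history = []
    for deposit in deposits:
        balance = balance * (1 + rate) + deposit
        history.append(balance)
    return history


def scale_all(values, factor):
    """Parallel workload: each output depends only on its own input,
    so the elements can be computed in any order, or all at once."""
    out = [0.0] * len(values)
    order = list(range(len(values)))
    random.shuffle(order)  # simulate independent workers finishing in any order
    for i in order:
        out[i] = values[i] * factor
    return out


print(running_balance([100.0, 100.0, 100.0], 0.5))  # [100.0, 250.0, 475.0]
print(scale_all([1.0, 2.0, 3.0], 2.0))              # [2.0, 4.0, 6.0]
```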

CPU vs. GPU: Architecture Differences

CPU

Low compute density

Complex control logic

Large caches (L1, L2, etc.)

Optimized for serial operations

Shallow pipelines (<30 stages)

Low latency tolerance

Newer CPUs have more parallelism

GPU

High compute density

Many computations per memory access

Built for parallel operations

Deep pipelines (hundreds of stages)

High throughput

High latency tolerance

Newer GPUs have better flow control logic (becoming more CPU-like)
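The GPU property of performing many computations per memory access is commonly quantified as arithmetic intensity: floating-point operations (FLOPs) performed per byte moved to or from memory. The hypothetical Python sketch below compares two textbook kernels, assuming 32-bit floats and, for matrix multiplication, an idealized cache in which each matrix crosses the memory bus only once.

```python
FLOAT32_BYTES = 4  # assumption: single-precision data


def vector_add_intensity(n):
    """c[i] = a[i] + b[i]: n additions; reads a and b and writes c (3n floats)."""
    flops = n
    bytes_moved = 3 * n * FLOAT32_BYTES
    return flops / bytes_moved


def matmul_intensity(n):
    """C = A @ B for n x n matrices: about 2n^3 FLOPs (one multiply and one add
    per term). Assumes ideal caching, so A, B and C each move through memory once."""
    flops = 2 * n ** 3
    bytes_moved = 3 * n * n * FLOAT32_BYTES
    return flops / bytes_moved


for n in (1_000, 1_000_000):
    print(f"vector add, n={n}: {vector_add_intensity(n):.3f} FLOP/byte")
for n in (64, 1024, 8192):
    print(f"matmul, n={n}: {matmul_intensity(n):.1f} FLOP/byte")
```

Vector addition stays at 1/12 FLOP per byte however large n gets, so it is limited by memory bandwidth on either kind of processor. Matrix multiplication grows as n/6, which is why dense linear algebra can keep thousands of GPU cores busy.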

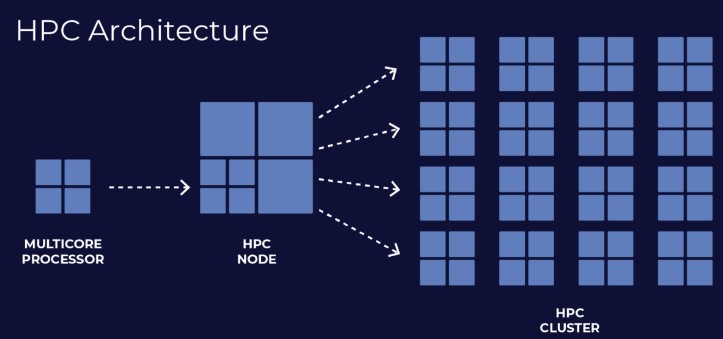

HPC Architecture

HPC architecture is not limited to a fixed number of compute nodes. Instead, the size of the cluster is defined by the processing power necessary to solve a particular problem.
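Sizing a cluster to the problem can be estimated with simple arithmetic. The Python sketch below is a back-of-the-envelope calculation, not a scheduler or procurement tool: it assumes the job's total work is known in core-hours and that the work scales perfectly across cores, so the result is a lower bound on the nodes required. The function name and the numbers in the example are hypothetical.

```python
import math


def nodes_needed(total_core_hours, cores_per_node, deadline_hours):
    """Smallest node count that can finish the work by the deadline,
    assuming perfect scaling (an optimistic lower bound)."""
    if min(total_core_hours, cores_per_node, deadline_hours) <= 0:
        raise ValueError("all inputs must be positive")
    core_hours_per_node = cores_per_node * deadline_hours
    return math.ceil(total_core_hours / core_hours_per_node)


# Hypothetical job: 10,000 core-hours of work, 64-core nodes, a 24-hour deadline.
print(nodes_needed(10_000, 64, 24))  # 7
```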

To build a high-performance computing architecture, compute servers are networked together into a “cluster”. Software programs and algorithms are run simultaneously on the servers in the cluster. The cluster is networked to the data storage to capture the output. Together, these components operate seamlessly to complete a diverse set of tasks.

To operate at maximum performance, each component must keep pace with the others. For example, the storage component must be able to feed and ingest data to and from the compute servers as quickly as it is processed. Likewise, the networking components must be able to support the high-speed transportation of data between compute servers and the data storage. If one component cannot keep up with the rest, the performance of the entire HPC infrastructure suffers.
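The requirement that every component keep pace can be expressed as a simple bottleneck model: data flows from storage over the network into the compute servers, so the end-to-end rate is capped by the slowest stage. The Python sketch below uses hypothetical placeholder rates, not measurements of any real system, and ignores effects such as caching and overlapping transfers.

```python
def bottleneck(stage_rates_gbps):
    """Return (stage, rate) for the slowest stage; it caps end-to-end throughput."""
    slowest = min(stage_rates_gbps, key=stage_rates_gbps.get)
    return slowest, stage_rates_gbps[slowest]


def utilization(stage_rates_gbps):
    """Fraction of each stage's capacity actually used when the pipeline
    runs at the bottleneck rate; values below 1.0 are idle capacity."""
    _, cap = bottleneck(stage_rates_gbps)
    return {stage: cap / rate for stage, rate in stage_rates_gbps.items()}


# Hypothetical sustained rates in GB/s.
rates = {"storage": 20.0, "network": 12.5, "compute_ingest": 40.0}
print(bottleneck(rates))   # ('network', 12.5)
print(utilization(rates))  # {'storage': 0.625, 'network': 1.0, 'compute_ingest': 0.3125}
```

In this made-up configuration the network runs at full capacity while the compute servers use less than a third of theirs, so upgrading compute alone would not speed anything up.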

Calculation of Data

HPC processes large amounts of data using nodes. Each node contains many CPU cores (often alongside GPUs), plenty of RAM, and very fast storage to crunch tremendous amounts of data. Because an HPC system is a cluster of computers, a faculty member is not limited to a single CPU with a few cores; the HPC nodes provide access to thousands of cores that can all work on the problem at hand.
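Thousands of cores only help if the work is divided among them. One common approach, similar in spirit to how MPI programs split an array by rank, is a two-level block decomposition: divide the items across nodes, then across the cores inside each node. The Python sketch below only computes the assignment; the function names are hypothetical and no real scheduler or MPI library is involved.

```python
def block_range(n_items, n_parts, part):
    """Contiguous block [start, stop) owned by `part` when n_items are split
    as evenly as possible among n_parts (block sizes differ by at most one)."""
    start = part * n_items // n_parts
    stop = (part + 1) * n_items // n_parts
    return start, stop


def assign_work(n_items, nodes, cores_per_node):
    """Map each (node, core) pair to the slice of items it processes:
    first split across nodes, then across the cores inside each node."""
    plan = {}
    for node in range(nodes):
        n_start, n_stop = block_range(n_items, nodes, node)
        for core in range(cores_per_node):
            c_start, c_stop = block_range(n_stop - n_start, cores_per_node, core)
            plan[(node, core)] = (n_start + c_start, n_start + c_stop)
    return plan


# Hypothetical job: one million items on 16 nodes with 64 cores each.
plan = assign_work(1_000_000, nodes=16, cores_per_node=64)
print(len(plan))         # 1024
print(plan[(0, 0)])      # (0, 976)
print(plan[(15, 63)])    # (999023, 1000000)
```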

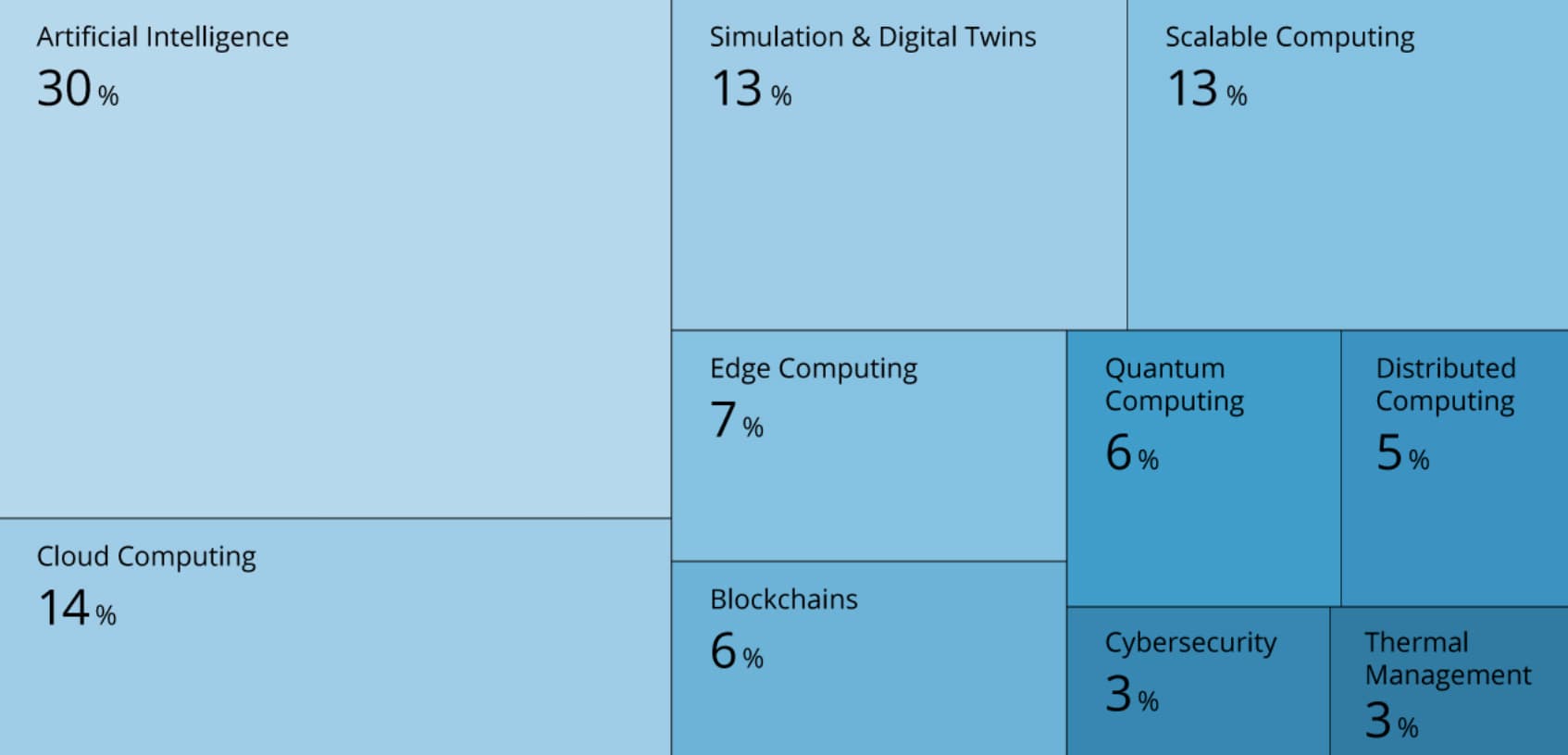

Impact of the Top 10 HPC Trends in 2024

AI addresses the challenges of rapid algorithm testing and validation, while scalable and cloud computing expand computational capabilities and improve accessibility. In addition, innovations in blockchain and cybersecurity make data processing over HPC networks secure and traceable. Edge computing and digital twins enable simulation and analysis of more complex processes. Innovations in distributed computing are overcoming many of the limitations of HPC data centers, while thermal management technologies are reducing the carbon footprint of HPC. Lastly, quantum algorithms can complement classical HPC systems by providing more efficient ways to solve specific problems.

Maximum Density and Efficiency for Cloud and HPC with X13 Twin Systems

Supermicro is the undisputed leader in dense-yet-efficient Twin architectures, with BigTwin®, GrandTwin™ and FatTwin® bringing multi-node compute to a range of workloads, from HPC and cloud to EDA and the edge. Host Bob Moore talks with Bill Chen, GM of System Product Management, and Josh Grossman, Principal Product Manager, about the performance, security, and green-computing benefits of multi-node architectures powered by 4th Gen Intel® Xeon® Scalable processors.

Experience the Power: 6 Transformative Benefits of HPC

High Performance Computing (HPC) allows for extremely fast processing speeds, enabling complex calculations and simulations to be completed in a fraction of the time compared to traditional computing systems.

With HPC, tasks that would take weeks or months on conventional systems can be completed in a matter of hours or days, leading to significant improvements in productivity for research, engineering, and other data-intensive fields.
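The weeks-to-hours comparison assumes the work parallelizes well. Amdahl's law gives a standard rough estimate: if a fraction p of a job can run in parallel on N cores, the speedup is at most S = 1 / ((1 - p) + p / N). The Python sketch below applies it to a hypothetical 1,000-hour serial job (about six weeks); the job size and parallel fractions are illustrative assumptions, and real codes also lose time to communication and I/O.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Amdahl's law: S = 1 / ((1 - p) + p / N)."""
    if not 0.0 <= parallel_fraction <= 1.0 or cores < 1:
        raise ValueError("need 0 <= parallel_fraction <= 1 and cores >= 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


def estimated_hours(serial_hours, parallel_fraction, cores):
    """Wall-clock estimate on `cores` cores, ignoring communication overhead."""
    return serial_hours / amdahl_speedup(parallel_fraction, cores)


# Hypothetical 1,000-hour serial job run on 1,000 cores.
for p in (0.90, 0.99, 0.999):
    print(f"p={p}: about {estimated_hours(1_000, p, 1_000):.1f} hours")
```

With these assumptions the job drops to roughly 101, 11 and 2 hours respectively. The serial fraction, not the core count, sets the ceiling: at p = 0.9 the speedup can never exceed 10×, however many cores are added.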

HPC enables higher-resolution simulations and analyses, leading to more accurate results in scientific research, weather forecasting, financial modeling, and other applications where precision is crucial.
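Higher resolution is also where much of the computing demand comes from. For a simulation on a uniform 3D grid, halving the grid spacing multiplies the number of cells by 2^3 = 8, and for explicit time-stepping schemes, whose stable time step usually shrinks with the spacing, the number of steps roughly doubles as well. The hypothetical Python helpers below make that arithmetic explicit, assuming work is proportional to cells times time steps; codes with adaptive meshes or implicit solvers scale differently.

```python
def grid_cells(domain_length, spacing, dims=3):
    """Cells in a uniform grid covering a cube of side `domain_length`."""
    per_axis = round(domain_length / spacing)
    return per_axis ** dims


def refinement_cost_factor(refine, dims=3, timestep_shrinks=True):
    """Relative work when the grid spacing is divided by `refine`.
    Assumes work is proportional to cells x time steps."""
    factor = refine ** dims
    if timestep_shrinks:
        factor *= refine  # explicit schemes: smaller cells need smaller time steps
    return factor


print(grid_cells(100.0, 1.0), grid_cells(100.0, 0.5))   # 1000000 8000000
print(refinement_cost_factor(2))                          # 16
print(refinement_cost_factor(2, timestep_shrinks=False))  # 8
```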

While HPC systems may require significant upfront investment, they often offer better cost efficiency in the long run by reducing the time and resources needed to complete tasks, thus lowering overall operational expenses.

Organizations that leverage HPC gain a competitive edge by being able to deliver faster insights, develop more sophisticated models, and make data-driven decisions more quickly than their competitors.

In industries such as finance, healthcare, and manufacturing, HPC enables real-time analysis of large volumes of data, allowing organizations to make informed decisions quickly and respond rapidly to changing conditions or market trends.

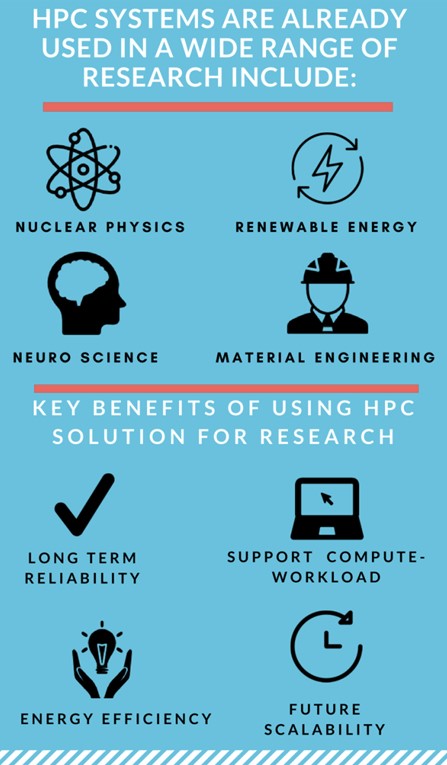

Empowering Innovation: The Role of HPC in Advancing Science and Industry

HPC is utilized across various fields such as scientific research, weather forecasting, financial modeling, and engineering simulations. For example, in scientific research, HPC helps analyze massive datasets from experiments, simulate complex physical processes like climate dynamics or molecular interactions, and model astrophysical phenomena.

Overall, HPC enables breakthroughs in scientific discovery, innovation in various industries, and advancements in technology by providing the computational muscle needed to solve complex problems and process large amounts of data in record time.

Discover Your Perfect Data Management Fit with Our Tailored Solutions

Interested in harnessing the power of CPU computing for your projects? Our sales team is ready to assist you. Contact us to explore how our CPU Computing solutions can transform your computational capabilities.
