Just a Bunch Of Disks

Simplify and Expand: The Seamless Nature of JBOD Storage

JBOD, short for "Just a Bunch Of Disks," is a straightforward storage strategy that attaches multiple hard drives or solid-state drives (SSDs) to a system without RAID. Unlike RAID, which aims at data redundancy (mirroring or parity) or performance enhancement through data striping, JBOD either presents each drive to the operating system as an individual storage unit or concatenates ("spans") the drives into one logical volume.

This storage method lets each drive within the JBOD setup function independently. In a spanned configuration, data is stored sequentially across the drives, filling one drive before moving on to the next. Consequently, if a drive fails, only the data on that particular drive is compromised, leaving the data on the remaining drives intact (on a spanned volume, reaching it may still require file system repair). However, the absence of built-in redundancy means JBOD is not ideal for scenarios demanding high data integrity and fault tolerance.
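The sequential fill described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular controller or volume manager is implemented: the function name `locate_block` and the idea of measuring drives in abstract "blocks" are assumptions made for the example.

```python
from bisect import bisect_right
from itertools import accumulate


def locate_block(drive_sizes, logical_block):
    """Map a logical block of a spanned volume to (drive index, block on that drive).

    drive_sizes is a list of drive capacities in blocks, in span order.
    Hypothetical helper for illustration only.
    """
    if not drive_sizes or any(size <= 0 for size in drive_sizes):
        raise ValueError("drive_sizes must contain positive capacities")

    # Cumulative end position of each drive within the logical volume.
    ends = list(accumulate(drive_sizes))
    if not 0 <= logical_block < ends[-1]:
        raise ValueError("logical block is outside the volume")

    # The first drive whose end position lies beyond the block holds it.
    drive = bisect_right(ends, logical_block)
    drive_start = ends[drive] - drive_sizes[drive]
    return drive, logical_block - drive_start


if __name__ == "__main__":
    sizes = [100, 50, 200]  # three drives of different sizes
    for block in (0, 99, 100, 150, 349):
        print(block, "->", locate_block(sizes, block))
```

Because every logical block lives on exactly one drive, a failed drive takes only its own block range with it, which is the behavior the paragraph above describes.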

A Blend of Simplicity, Cost Efficiency & Adaptability

JBOD's blend of simplicity, cost efficiency, and adaptability makes it suitable for specific scenarios, especially where its benefits align with organizational storage strategies and needs.

- Cost Savings: JBOD setups are less expensive than complex RAID systems, avoiding specialized hardware and extra processing.

- Flexible and Scalable Storage: Easily mix drives of various sizes and brands, perfect for evolving storage demands.

- Ease of Setup and Management: JBOD arrays are simple to configure and manage, offering straightforward disk management.

- Full Capacity Utilization: Utilize every bit of storage available on each disk, maximizing your storage capacity.

- Selective Data Accessibility: If a disk fails, the rest remain unaffected, ensuring continuous access to unaffected data.
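To make the capacity point concrete, the sketch below compares usable capacity for a set of mixed-size drives under JBOD and under a few common RAID levels. It uses the standard textbook formulas (RAID sizes every member to the smallest drive); the function name `usable_capacity_tb` and the example drive sizes are assumptions, and real controllers may reserve additional space.

```python
def usable_capacity_tb(drive_sizes_tb, layout):
    """Return usable capacity in TB for a list of drive sizes and a layout name.

    Textbook formulas only; hypothetical helper for illustration.
    """
    if not drive_sizes_tb:
        raise ValueError("at least one drive is required")

    count = len(drive_sizes_tb)
    smallest = min(drive_sizes_tb)

    if layout == "jbod":
        # Every drive contributes its full capacity.
        return sum(drive_sizes_tb)
    if layout == "raid0":
        # Striping uses the smallest drive's size on every member.
        return count * smallest
    if layout == "raid1":
        # All members mirror the same data.
        return smallest
    if layout == "raid5":
        if count < 3:
            raise ValueError("RAID 5 needs at least three drives")
        # One drive's worth of space holds parity.
        return (count - 1) * smallest
    raise ValueError(f"unknown layout: {layout}")


if __name__ == "__main__":
    drives = [4, 8, 12]  # mixed sizes in TB, chosen for the example
    for layout in ("jbod", "raid0", "raid1", "raid5"):
        print(layout, usable_capacity_tb(drives, layout), "TB")
```

With mixed drive sizes the gap is largest, which is why JBOD pairs well with the "mix drives of various sizes" benefit listed above.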

JBOD Solution

Head Node

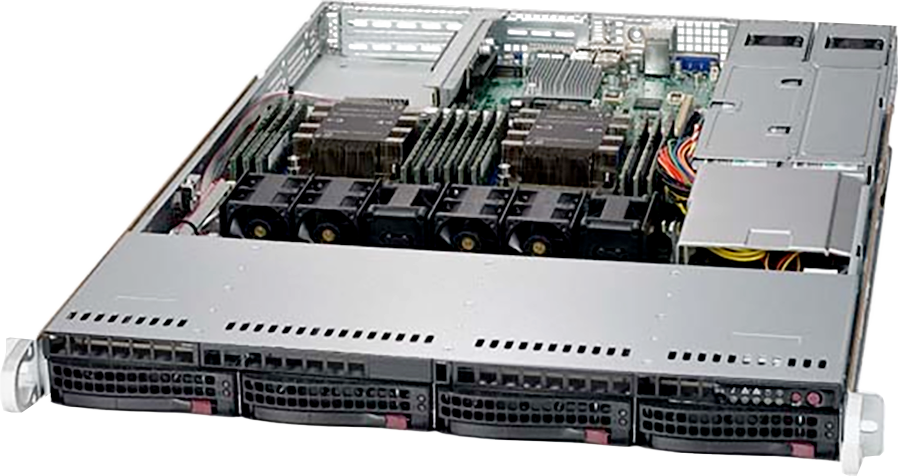

SuperMicro SuperServer 6019P-WTR

Ideal use cases: web server, firewall applications, corporate WINS, DNS, print, and login gateway servers, provisioning servers, compact network appliances, cloud computing.

JBOD

The J4078-0135X is a 4U, 108-bay, 12Gb/s SAS JBOD enclosure suited to high-availability storage and high-performance appliances. It is available with single or dual expanders and supports 108 x 3.5-inch drives.

Benefits of Modern CPUs in Computing

- Flexibility: Versatile, capable of handling a wide range of tasks and multitasking efficiently.

- Speed: For operations involving RAM data processing, I/O operations, and managing the operating system, CPUs often outperform GPUs.

- Precision: Strong support for high-precision arithmetic (for example, 64-bit floating point), which is crucial for many numerical applications.

- Cache Memory: With substantial local cache, CPUs efficiently manage extensive sequences of instructions.

- Compatibility: Every server already contains a CPU, so CPU-based workloads run without the additional hardware, drivers, power, and cooling that GPUs may require, ensuring broad hardware compatibility.

Maximizing AI Efficiency: Navigating the Unique Strengths of CPUs and GPUs

CPUs and GPUs each bring unique strengths to AI projects, tailored to different types of tasks. The CPU, serving as the computer's central processor, runs the operating system and coordinates system operations. It excels at executing complex calculations sequentially, but because it has far fewer parallel execution units than a GPU, its throughput drops on massively parallel workloads.

For AI, CPUs fit specific niches, excelling in tasks that require sequential processing or lack parallelism. Suitable applications include:

- High-memory recommender systems

- Large-scale data processing, like 3D data analysis

- Real-time inference and ML algorithms that resist parallelization

- Sequentially dependent recurrent neural networks
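The last item in the list can be illustrated with a deliberately tiny recurrence. This is a sketch under stated assumptions, not a real neural network: the scalar weights `w_hidden` and `w_input` and the function name `run_recurrence` are invented for the example. The point is only that each step needs the previous step's result, so the time loop cannot be spread across parallel workers.

```python
import math


def run_recurrence(inputs, w_hidden=0.5, w_input=1.0, h0=0.0):
    """Compute h_t = tanh(w_hidden * h_(t-1) + w_input * x_t) for each input.

    A scalar stand-in for a recurrent layer; weights are illustrative.
    """
    h = h0
    states = []
    for x in inputs:
        # h on the right-hand side is the state from the previous step,
        # so step t cannot start before step t-1 has finished.
        h = math.tanh(w_hidden * h + w_input * x)
        states.append(h)
    return states


if __name__ == "__main__":
    print(run_recurrence([0.1, -0.2, 0.3]))
```

Workloads shaped like this loop gain little from thousands of GPU threads and tend to favor a CPU core with high single-thread performance.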

CPU and GPU Collaboration

High Performance Computing (HPC) utilizes technologies for large-scale, parallel computing. Modern HPC systems increasingly incorporate GPUs alongside traditional CPUs, often combining them within the same server for enhanced performance.

These HPC systems employ a dual root PCIe bus design to efficiently manage memory across numerous processors. This setup features two main processors each with its own memory zone, dividing the PCIe slots (often used for GPUs) between them for balanced access.

Key to this architecture are three types of fast data connections:

- Inter-GPU Connection: Uses NVLink for rapid GPU-to-GPU communication, allowing multiple GPUs to function as a single, powerful unit.

- Inter-Root Connection: Facilitates communication between the two processors via high-speed links like Intel's Ultra Path Interconnect (UPI).

- Network Connection: Employs fast network interfaces, typically InfiniBand, for external communications.

This dual-root PCIe configuration optimizes both CPU and GPU memory usage, catering to applications that demand both parallel and sequential processing capabilities.
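The dual-root layout can be pictured with a small toy model. It does not query any hardware: the `Gpu` class, the even split of GPUs between the two roots, and the path labels are all assumptions made for illustration, and the model covers only the PCIe path, not NVLink. On a real NVIDIA system, `nvidia-smi topo -m` reports the actual topology.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gpu:
    index: int
    root: int  # 0 or 1: the CPU whose PCIe root complex hosts this GPU


def build_dual_root(num_gpus=8):
    """Place the first half of the GPUs on root 0 and the rest on root 1."""
    if num_gpus <= 0 or num_gpus % 2:
        raise ValueError("expected a positive, even number of GPUs")
    half = num_gpus // 2
    return [Gpu(i, 0 if i < half else 1) for i in range(num_gpus)]


def pcie_path(a, b):
    """Classify the PCIe-only path between two GPUs (NVLink is ignored)."""
    if a.root == b.root:
        return "same-root"
    # Traffic has to cross the inter-root link between the two CPUs.
    return "cross-root"


def local_gpus(gpus, root):
    """List the GPU indices attached to the given root."""
    return [gpu.index for gpu in gpus if gpu.root == root]


if __name__ == "__main__":
    gpus = build_dual_root(8)
    print("root 0:", local_gpus(gpus, 0))
    print("root 1:", local_gpus(gpus, 1))
    print("GPU 0 to GPU 1:", pcie_path(gpus[0], gpus[1]))
    print("GPU 0 to GPU 7:", pcie_path(gpus[0], gpus[7]))
```

In practice, schedulers use this kind of information to keep a process, its memory, and its GPUs on the same root where possible, so that only unavoidable traffic crosses the inter-root link.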

GIGABYTE

HPC/AI Server

5th/4th Gen Intel® Xeon® Scalable - 5U DP HGX™ H100 8-GPU

NVIDIA HGX™ H100 with 8 x SXM5 GPUs

900GB/s GPU-to-GPU bandwidth with NVLink® and NVSwitch™

5th/4th Gen Intel® Xeon® Scalable Processors

Intel® Xeon® CPU Max Series

The Indispensable Role of CPUs in AI Development

While the majority of AI applications today leverage the parallel processing prowess of GPUs for efficiency, CPUs remain valuable for certain sequential or algorithm-intensive AI tasks. This makes CPUs an essential tool for data scientists who prioritize specific computational approaches in AI development.

For machine learning and AI applications, Intel® Xeon® and AMD EPYC™ CPUs are recommended for their reliability, their ability to provide the PCI-Express lanes needed for multiple GPUs, and their strong memory performance, making them ideal choices for demanding computational tasks.
